Hosting multiple sites or applications using Docker and NGINX reverse proxy with Letsencrypt SSL

In this article, you’ll find instructions for how to set up multiple websites with SSL on one host easily using Docker, Docker Compose, nginx, and Let’s Encrypt.

Nginx proxy

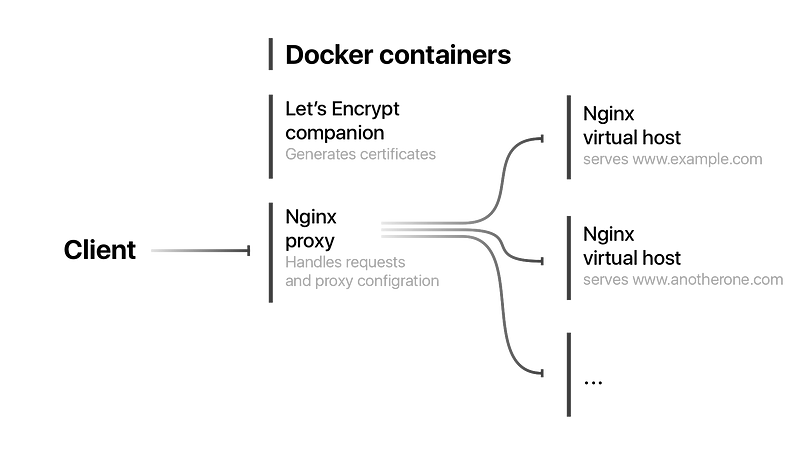

To host multiple websites on one machine, we need a proxy server that handles all requests and directs them to the correct nginx server instances running in Docker containers. To achieve that, we will use the jwilder/nginx-proxy image for Docker. It automatically configures a proxy for our nginx containers when we launch them.

nginx-proxy has a couple of things happening:

- Its ports 80 and 443 are forwarded to the host, making it Internet-facing. No other containers we run on this machine will need their ports forwarded; all communication from and to the outside will be proxied through here, hence “reverse proxy”.

- Various NGINX configuration directories are mounted as named volumes to keep them persistent on the host system. Those volumes are defined further down in the file.

- `/var/run/docker.sock` from the host is mounted. This allows the proxy to listen in to other containers starting and stopping on the host, and configure NGINX forwarding as needed. Containers need to present their desired hostnames and ports as environment variables that the proxy can read. More on that further below.

- Finally, the container is assigned to a `proxy` external network, which is described below.

NGINX reverse proxy and SSL

The NGINX reverse proxy is the key to this whole setup. Its job is to listen on external ports 80 and 443 and connect requests to corresponding Docker containers, without exposing their inner workings or ports directly to the outside world. Additionally, with the SSL companion container the proxy also automatically redirects all HTTP requests to HTTPS and handles SSL encryption for all traffic, including certificate management.

Let’s Encrypt companion

For automatic certificate management, we will use the jrcs/letsencrypt-nginx-proxy-companion image. It watches for the containers that we launch and, together with the nginx proxy, does everything needed to enable SSL on our nginx virtual host containers.

letsencrypt is a companion container to nginx-proxy that handles all the necessary SSL tasks - obtaining the required certificates from Let’s Encrypt and keeping them up-to-date, and auto-configuring nginx-proxy to transparently encrypt all proxied traffic to and from application containers.

It’s connected to nginx-proxy by sharing its volumes: in the Compose file shown later in this article, both services mount the same named volumes. It also listens in on the host’s /var/run/docker.sock to be notified when application containers are started and to read from them the information it needs to obtain the necessary SSL certificates.

Note that this container doesn’t need to be put in the external network. It gets by using only the shared volumes and the Docker socket, and never needs to accept connections from the outside world or talk to another container directly. The only network traffic it needs is outbound, for example to Let’s Encrypt.

External network

Last but not least, there’s the external network. To understand why you might need it, you need to know how docker-compose handles networks by default:

- For every application that is run using its own `docker-compose.yml`, Compose creates a separate network. All containers within that application are assigned only to that network and can talk to each other and to the Internet.

- We want to deploy multiple applications on this server using Compose, each with their own `docker-compose.yml`, and proxy them all to the outside world via our `nginx-proxy` container.

- No other container can access containers within a default network created by docker-compose, only those inside the application’s own `docker-compose.yml`. This makes life difficult for `nginx-proxy`.

- To work around this, we create a single network outside of Compose’s infrastructure and place our `nginx-proxy` container in that network. To do this, we need to define this network as `external` in the `docker-compose.yml`. This way Compose will not try to create the network itself, but just assign the containers it creates to the existing outside network.

- If we try to run this as it is, Compose will error out telling us the external network doesn’t exist.

We need to initially create the proxy network manually with:

    sudo docker network create nginx-proxy

Now we can run our proxy container and SSL companion:

    sudo docker-compose up -d

And continue with deploying at least one application behind the proxy to see that it actually works.
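Running `docker network create` a second time fails because the network already exists, which gets in the way when the setup steps are scripted. A minimal sketch of an idempotent variant follows; the helper name `ensure_network` is hypothetical and not part of Docker.

```shell
# Hypothetical helper: create a Docker network only if it does not exist yet.
ensure_network() {
  name="$1"
  # "docker network inspect" exits non-zero when the network is missing
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create "$name" >/dev/null && echo "network $name created"
  fi
}

# Usage (prefix with sudo if your user is not in the docker group):
#   ensure_network nginx-proxy
```

The function can be called as often as needed, so it is safe to keep in a provisioning script.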

Main Docker Compose file

Let’s start by creating our main Docker Compose file, which will launch our nginx proxy and Let’s Encrypt companion containers. In a separate folder called proxy, create a file named docker-compose.yml with the contents below:

    version: '3' # Version of the Docker Compose file format

    services:
      nginx-proxy:
        image: jwilder/nginx-proxy:alpine
        restart: "always" # Always restart container
        ports:
          - "80:80" # Port mappings in format host:container
          - "443:443"
        networks:
          - nginx-proxy # Name of the network these two containers will share
        labels:
          - "com.github.jrcs.letsencrypt_nginx_proxy_companion.nginx_proxy" # Label needed for Let's Encrypt companion container
        volumes: # Volumes needed for container to configure proxies and access certificates generated by Let's Encrypt companion container
          - /var/run/docker.sock:/tmp/docker.sock:ro
          - "nginx-conf:/etc/nginx/conf.d"
          - "nginx-vhost:/etc/nginx/vhost.d"
          - "html:/usr/share/nginx/html"
          - "certs:/etc/nginx/certs:ro"

      letsencrypt-nginx-proxy-companion:
        image: jrcs/letsencrypt-nginx-proxy-companion
        restart: always
        container_name: letsencrypt-nginx-proxy-companion
        volumes:
          - "/var/run/docker.sock:/var/run/docker.sock:ro"
          - "nginx-conf:/etc/nginx/conf.d"
          - "nginx-vhost:/etc/nginx/vhost.d"
          - "html:/usr/share/nginx/html"
          - "certs:/etc/nginx/certs:rw"
        depends_on: # Make sure we start nginx proxy container first
          - nginx-proxy

    networks:
      nginx-proxy: # Name of our shared network that containers will use

    volumes: # Names of volumes that our containers will share. Those will persist on docker's host machine.
      nginx-conf:
      nginx-vhost:
      html:
      certs:

Now, if we execute the following command:

    docker-compose up -d

Docker Compose will look for a file named docker-compose.yml in the current folder and launch all services (containers) that are described in there. In this case it will launch the nginx proxy and Let’s Encrypt companion containers. Also, because we are launching them for the first time, it will pull the images first.

Flag -d means that we want to run our containers in the background (detached mode).

Use the following command to check the containers’ status:

    docker ps

You should see two containers with Up status.
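On a host that already runs other containers, the full `docker ps` output can be noisy. The sketch below narrows it down to the two containers started above, assuming that both of their names contain `nginx-proxy` (true for the Compose file in this article, where Compose derives the proxy's container name from the service name). The helper name `proxy_status` is hypothetical.

```shell
# Hypothetical helper: show only name and status of the proxy containers.
proxy_status() {
  # --filter name=... matches containers whose name contains the given text;
  # --format takes a Go template, "table" adds a header row
  docker ps --filter "name=nginx-proxy" --format 'table {{.Names}}\t{{.Status}}'
}

# Usage:
#   proxy_status
```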

Nginx virtual host

Now, we will launch our first nginx container that will securely serve content through the proxy to the client.

In a new folder, called example.com (replace with the name of your domain) create a new docker-compose.yml file. In a subfolder named www place contents of your website. Also, create a subfolder called nginx with two files called Dockerfile and default.conf.

Structure of example.com folder should look like this:

- www (folder with your website’s content)
  - index.html
  - etc…
- nginx
  - Dockerfile
  - default.conf
- docker-compose.yml

Dockerfile:

    FROM nginx:alpine

    # Copy nginx configuration file
    COPY ./nginx/default.conf /etc/nginx/conf.d/default.conf

    # Copy website contents
    COPY ./www/ /usr/share/nginx/html/

default.conf:

    server {
        # Plain HTTP is enough here: nginx-proxy terminates SSL and forwards
        # requests to this container over HTTP. A "listen 443 ssl;" line
        # without an ssl_certificate would stop nginx from starting.
        listen 80 default_server;
        server_name example.com;
        root /usr/share/nginx/html/;
        index index.html;

        location / {
            try_files $uri $uri/ =404;
        }
    }

docker-compose.yml:

    version: '3'

    services:
      nginx:
        container_name: example-nginx
        image: example-nginx
        restart: always
        build:
          context: ./
          dockerfile: ./nginx/Dockerfile
        environment:
          - VIRTUAL_HOST=example.com # Environment variable needed for nginx proxy
          - LETSENCRYPT_HOST=example.com # Environment variables needed for Let's Encrypt companion
          - LETSENCRYPT_EMAIL=admin@example.com
        expose:
          - "80" # Expose http port
          - "443" # along with https port
        networks:
          - nginx-proxy # Connect this container to network named nginx-proxy, that will be described below

    networks:
      nginx-proxy:
        external:
          name: proxy_nginx-proxy # Reference our network that was created by Docker Compose when we launched our two main containers earlier. Name generated automatically. Use `docker network ls` to list all networks and their names.

Don’t forget to replace example.com in all files with your domain name.
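The name `proxy_nginx-proxy` is not arbitrary. Compose prefixes the networks it creates with the project name, which by default is the name of the folder that holds the docker-compose.yml (here `proxy`). The sketch below only illustrates that naming scheme under this assumption; the helper `compose_network_name` and the `/srv/proxy` path are hypothetical, and `docker network ls` remains the reliable way to see the real name.

```shell
# Hypothetical helper: guess the network name Compose generates, assuming the
# default "<project>_<network>" scheme with the lowercased folder name as project.
# $1 = folder containing docker-compose.yml, $2 = network key from that file
compose_network_name() {
  project=$(basename "$1" | tr '[:upper:]' '[:lower:]')
  printf '%s_%s\n' "$project" "$2"
}

compose_network_name /srv/proxy nginx-proxy   # prints proxy_nginx-proxy

# To confirm against what Docker actually created:
#   docker network ls --filter name=nginx-proxy
```

If the proxy folder has a different name, or the project name is overridden with `-p`, the prefix changes accordingly and the `external` name in the site's Compose file has to follow.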

Now everything is ready for building and launching our container:

    docker-compose up -d

Now you have three Docker containers up and running: the nginx proxy for handling requests and configuring proxies for our virtual hosts, the Let’s Encrypt companion for generating SSL certificates, and one virtual host container that serves content to users.
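A quick way to see whether the proxy and the companion did their job is to request the site over plain HTTP and look at where the proxy sends you. The following is a minimal sketch using curl; the helper name `check_redirect` is hypothetical, and it assumes the domain's DNS record already points at this host.

```shell
# Hypothetical helper: print the status code and redirect target of a plain-HTTP request.
check_redirect() {
  # -o /dev/null discards the body; -w prints selected details after the transfer
  curl -sS -o /dev/null -w '%{http_code} %{redirect_url}\n' "http://$1/"
}

# Usage:
#   check_redirect example.com
```

Once the certificate has been issued, the proxy is expected to answer with a redirect status code (such as 301) and an `https://` target. Issuing the first certificate can take a little while, so an error right after startup does not necessarily mean the setup is broken.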

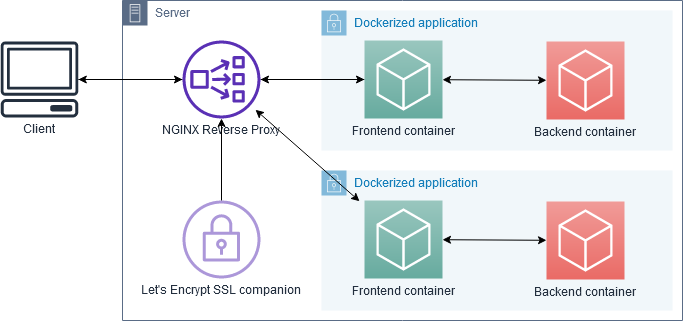

Multi-container applications

Most applications you might want to deploy consist of several containers — say, a database, a backend service that does some processing, and a frontend. Of those containers, you may only want the frontend to be accessible from the outside, but the three should be able to talk to each other internally. A docker-compose.yml for such a setup might look something like:

    version: '3'

    services:
      frontend:
        image: frontend-image:latest
        expose:
          - "3000"
        environment:
          - VIRTUAL_HOST=awesomeapp.domain.tld
          - VIRTUAL_PORT=3000
          - LETSENCRYPT_HOST=awesomeapp.domain.tld
          - LETSENCRYPT_EMAIL=email@somewhere.tld
          - BACKEND_HOST=backend
        networks:
          - proxy
          - app

      backend:
        image: backend-image:latest
        environment:
          - DB_HOST=db
        networks:
          - app

      db:
        image: postgres:11
        volumes:
          - /opt/app-db:/var/lib/postgresql/data
        networks:
          - app

    networks:
      proxy:
        external:
          name: nginx-proxy # Must match the network the nginx-proxy container is attached to; check with `docker network ls`
      app:

Things of note here:

- Only the `frontend` container is put into the `proxy` external network because it’s the only one that needs to talk to `nginx-proxy`. It’s also the only one with the environment variables for proxy configuration. The containers that are only used internally in the app don’t need those.

- `backend` and `db` containers are put in a separate `app` network, which is defined additionally under `networks`. This serves in place of the default network that Compose would normally create for all containers defined in the `docker-compose.yml`. Once a service lists its networks explicitly, Compose no longer attaches it to the default network, so without `app` our `frontend` would be cut off from `backend` and `db`. With the `app` network, they can talk to each other and to `frontend` normally.

As you can see, the setup is not very complicated. The only things to add to your normal docker-compose.yml of any multi-container app are the networks and environment variables for the frontend container.
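When a proxied app cannot be reached, a common cause is a container sitting in the wrong network. The sketch below prints the networks a container is attached to, using `docker inspect` with a Go template. The helper name `container_networks` is hypothetical.

```shell
# Hypothetical helper: list the networks a container is attached to.
# $1 = container name or ID
container_networks() {
  docker inspect \
    --format '{{range $name, $cfg := .NetworkSettings.Networks}}{{$name}} {{end}}' \
    "$1"
}

# Usage, run from the folder with the app's docker-compose.yml:
#   container_networks "$(docker-compose ps -q frontend)"
```

For the example above, `frontend` should show up in both the external proxy network and the app network, while `backend` and `db` should only be in the app network.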

Multiple proxied frontend containers

You can have multiple containers within a single Compose application that you want to make available via the proxy — for example, a public frontend and an administrative back office. You can configure as many containers inside one docker-compose.yml with the proxy network and necessary environment variables as you need. The only limitation is that every container needs to run on a separate VIRTUAL_HOST.

For example, you could run your public frontend at awesomeapp.domain.tld and its administrative back office at admin.awesomeapp.domain.tld.

That’s it!

In no time you’ll be able to access your website securely!

To launch another virtual host, just repeat the steps starting from the “Nginx virtual host” section.

Rebuilding the container’s image

To rebuild the Docker images described in the Compose file, for example if you changed the website’s content, use the following command:

    docker-compose build

It will rebuild all images of services that are described in the docker-compose.yml file located in the current folder.
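Rebuilding only produces new images. Containers that are already running keep using the old image until they are recreated. A minimal sketch that chains both steps follows; the helper name `redeploy` is hypothetical.

```shell
# Hypothetical helper: rebuild the images, then recreate the containers from them.
# Run it in the folder that contains docker-compose.yml.
redeploy() {
  docker-compose build && docker-compose up -d
}

# Usage:
#   redeploy
#
# Equivalent single command:
#   docker-compose up -d --build
```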

What if something isn’t working

If you have problems launching containers, try to launch them without the -d flag. This way you’ll be able to see the output of the container’s service (its stdout and stderr).
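If the containers are already running detached, the same output can be followed without restarting them. The sketch below wraps `docker-compose logs`; the helper name `follow_logs` and the default of 50 lines are arbitrary choices for illustration.

```shell
# Hypothetical helper: follow the logs of all services in the current folder's
# docker-compose.yml, starting with the last N lines (default 50).
follow_logs() {
  docker-compose logs -f --tail="${1:-50}"
}

# Usage:
#   follow_logs        # last 50 lines, then keep following
#   follow_logs 200    # last 200 lines, then keep following
```

Run in the proxy folder, this is a convenient way to watch the companion while it requests a certificate. Press Ctrl+C to stop following.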

To see the logs of an already running container, you can use the following command:

    docker logs CONTAINER_ID

To find CONTAINER_ID, look at the output of the following command:

    docker ps

SNI

Serving content from different domains using different certificates from one host is possible thanks to SNI (Server Name Indication). You can read more about this technology on Wikipedia. It’s a widely supported technology in browsers and other web clients. You can check support by popular web browsers here.
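To see SNI at work, you can ask the server for its certificate while announcing a specific hostname. The following is a minimal sketch using the `openssl` command-line tool; the helper name `cert_for` is hypothetical, and `203.0.113.10` in the usage lines is a placeholder for your server's address.

```shell
# Hypothetical helper: show which certificate a server presents for a given SNI name.
# $1 = address to connect to, $2 = hostname announced via SNI
cert_for() {
  # s_client performs the TLS handshake; -servername sets the SNI hostname.
  # x509 then prints the subject and expiry date of the returned certificate.
  openssl s_client -connect "$1:443" -servername "$2" </dev/null 2>/dev/null \
    | openssl x509 -noout -subject -enddate
}

# Usage: the same address should return a different certificate per hostname
#   cert_for 203.0.113.10 example.com
#   cert_for 203.0.113.10 awesomeapp.domain.tld
```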

Github project

You can find an example project on GitHub. Have fun!